# Nectar Reference Compiler Source Documentation

When writing a program, I usually write the most primitive and smallest code I can that does the job. If it turns out I miscalculated the complexity, or I must add a feature that isn't compatible with the codebase, I'll obviously have to refactor it. Still, I've been programming this way for probably my entire life.

That being said, if you know it has taken since 2019 for this compiler to reach its current state, you will correctly guess that I DO NOT KNOW WHAT I AM DOING. Compiler literature, and online discussion, is abstract to the point where it is not useful for real-world processors. Imagine how long it took me to realize that real-world IRs are not at all generic, and are actually quite close to their target architectures. As a result, much of what you see in the source is the result of a lot of experimentation. There are definitely better ways to do the things I show here, but I figured it's better to have at least some resource on how a "real" compiler works.

The core idea behind the compiler is to progressively iterate through the AST, turning it into a more primitive form step by step. Once this primitivization ends, the code generator is given the code in a form it will understand. Doing it this way is necessary because machine code itself is primitive, and instructions typically have 0-3 operands. Thanks to both this and Nectar itself being highly low-level, the need for an IR disappears. On the other hand, making sure the AST is in a correct state between steps is the prime source of bugs.

Currently the compiler is designed with only i386+ processors in mind. I intend to add support for i286- and other exotic processors, but honestly I don't see it ever happening, especially if this remains a solo project. More RISC-like architectures with regular register files will be easier to support, but they're also the kind for which the advantages of this programming language aren't worth the squeeze.

## AST structure

Starting with a Nectar source file, the compiler begins with the two common passes: lexing and parsing. Parsing exploits Nectar's syntax quirks, and may jump back and forth multiple times to fully parse a source file. This is necessary to avoid having to forward-declare items. At the end, parsing returns what the source calls an AST, although formally speaking the term is used incorrectly.

An AST node may not be shared by multiple parent nodes. Also, the internal Nectar AST does not scale pointer arithmetic; all pointers behave as `u8*`. This is the first of many simplifications.

Each block of code is called a "chunk", likely a term I took from Lua. Chunks may contain one another; the outermost one within a function is called the top-level chunk (very important). Top-level chunks may contain other top-level chunks, because user-defined functions are within the "global scope", which is considered a function in itself. After all, nothing stops you from directly inserting instructions in the `.text` section of an executable without attaching them to a label.

During parsing, a tree of maps called `VarTable` is used to handle scopes and variable declarations. Its entries are of type `VarTableEntry` (VTE), which may be of kind `VAR`, `SYMBOL` (global variables) or `TYPE` (type-system entries). Shadowing in vartables is allowed, like in Nectar itself.
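
A minimal sketch of what a tree of maps with shadowing can look like, in C. This is not nctref's `VarTable`: the `Scope`, `Entry`, `scope_declare` and `scope_find` names are hypothetical, and a linked list stands in for a real map to keep it short.

```c
#include <stdlib.h>
#include <string.h>

/* Hypothetical entry kinds mirroring VAR / SYMBOL / TYPE. */
typedef enum { ENTRY_VAR, ENTRY_SYMBOL, ENTRY_TYPE } EntryKind;

typedef struct Entry {
	const char *name;
	EntryKind kind;
	struct Entry *next;   /* next entry in the same scope */
} Entry;

typedef struct Scope {
	struct Scope *parent; /* enclosing scope, NULL for the global one */
	Entry *entries;       /* newest declaration first */
} Scope;

Scope *scope_new(Scope *parent) {
	Scope *s = calloc(1, sizeof *s);
	s->parent = parent;
	return s;
}

/* Redeclaring a name just prepends a new entry, so the newer
 * declaration shadows the older one. */
Entry *scope_declare(Scope *s, const char *name, EntryKind kind) {
	Entry *e = malloc(sizeof *e);
	e->name = name;
	e->kind = kind;
	e->next = s->entries;
	s->entries = e;
	return e;
}

/* Look the name up from the innermost scope outward. */
Entry *scope_find(Scope *s, const char *name) {
	for(; s; s = s->parent) {
		for(Entry *e = s->entries; e; e = e->next) {
			if(strcmp(e->name, name) == 0) return e;
		}
	}
	return NULL;
}
```

Lookups walk from the innermost scope outward, and redeclaring a name simply puts the newer entry first, which is enough to get shadowing.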

The top-level chunk keeps a list of variables within its `ASTChunk` structure. After a chunk is finished parsing, all local variables in the current `VarTable` are added to its top-level chunk's variable list. Names may conflict, but at this point they're no longer important. Also worth mentioning is that this flat list contains `VarTableEntry` structs, even though `VarTable`s are now irrelevant. Said VTEs are all of kind `VAR`; the rest are ignored because they're not subject to coloring.

There are enough kinds of passes to justify a generic way to invoke the visitor pattern on the AST. Because passes may do many different things to the AST, including modifying it, the definition of a generic visitor is very broad. Each pass uses only part of this functionality, but every part is needed by some pass.

```c
void generic_visitor(AST **nptr, AST *stmt, AST *stmtPrev, AST *chu, AST *tlc, void *ud, void(*handler)(AST**, AST*, AST*, AST*, AST*, void*));
```

`*nptr` is the actual node that is currently being visited. It is behind an additional indirection because the node may be replaced by another.

If the current node is within a statement (most are), `stmt` is equal to that statement. `stmtPrev` is the previous statement. This is necessary for patching the linked list of statements within a chunk during modification passes. If there is no previous statement, then the head pointer of the singly-linked list must be patched through the `chu` node. The `tlc` is the top-level chunk, which may be equal to `chu`.
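
Why `stmtPrev` and `chu` are both needed is easiest to see in a splice. Below is a minimal sketch of inserting a new statement before the current one (for example, when a pass introduces a temporary). The `Stmt` and `Chunk` structs are hypothetical stand-ins for the real AST nodes.

```c
#include <stddef.h>

/* Hypothetical, heavily simplified stand-ins for the AST structures:
 * a chunk owns a singly-linked list of statements. */
typedef struct Stmt {
	struct Stmt *next;
	int id;
} Stmt;

typedef struct {
	Stmt *first;
} Chunk;

/* Insert `fresh` directly before `stmt`. If `stmt` has no predecessor
 * (stmtPrev == NULL), it is the head of the list, so the head pointer
 * stored in the chunk must be patched instead. */
void insert_before(Chunk *chu, Stmt *stmtPrev, Stmt *stmt, Stmt *fresh) {
	fresh->next = stmt;
	if(stmtPrev) {
		stmtPrev->next = fresh;
	} else {
		chu->first = fresh;
	}
}
```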

## Pre-dumbification

Before dumbification we need to make sure the code at least matches the semantics of the x86 architecture.

For one thing, arguments are not magically loaded with the correct values. The `pre_dumb_visitor` pass inserts assignment statements at the beginning of the function to load the values from the stack, as per the C ABI.

Then we have structures, which of course x86 doesn't support. There must be no by-value use of a structure anywhere. The `decompose_symbol_record_field_access` pass decomposes all references to a structure's field into pointer-like accesses. For example, `a.b` becomes `*((&a + x) as B*)`, where `B` is the type of `a.b`, and `x` is the memory offset of the field `a.b`. The code generator specially recognizes the same pattern for outputting an x86 addressing mode like `[eax + x]`.
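
The same decomposition can be mimicked in plain C, which may help when checking offsets by hand. The struct layout below is made up for illustration; `offsetof` plays the role of `x`, and the arithmetic is done on a `uint8_t *` because AST pointers behave as `u8*`.

```c
#include <stddef.h>
#include <stdint.h>

/* Made-up record with a field b of type B = uint16_t. */
typedef struct {
	uint32_t first;
	uint16_t b;
} A;

/* a.b, written the way the decomposed AST sees it: *((&a + x) as B*).
 * The addition is unscaled, as on a u8* pointer. */
uint16_t load_a_b(A *a) {
	size_t x = offsetof(A, b);              /* memory offset of field b */
	return *(uint16_t *)((uint8_t *)a + x); /* *((&a + x) as B*) */
}
```

With `&a` in `eax`, that load is exactly the `[eax + x]` addressing mode.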

Afterward, local structs must be spilled either to global memory or to the stack. They may also be decomposed in some cases, but that is currently unsupported. This is done by `spill2stack_visitor`, with `ast_spill_to_stack` as the public interface. Since spilling causes the stack frame of the function to grow, all references to the stack must be updated accordingly. This is also done by `spill2stack_visitor`, in such a way as to not require more dumbification.

Now a deeper structure access like `a.b.c` (`*((&(*((&a + x) as B*)) + y) as C*)`) is not recognized by the code generator. We must rely on optimization passes that are guaranteed to happen: `*&p` and `&*p` should become `p`, `p as A* as B*` should become `p as B*`, and `(p + x) as A* + y` should become `(p + (x + y)) as A*`. These are done by `denoop_visitor`. After these rewrites, `a.b.c` ends up as `*((&a + (x + y)) as C*)`, which is again the pattern the code generator recognizes.
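
A minimal sketch of these three rewrites on a toy expression tree, assuming a made-up `Expr` struct rather than nctref's AST types. It simplifies children first, then re-applies the rules at each node until none fires. Note that the third rule is only valid because pointer arithmetic in the AST is unscaled.

```c
#include <stdlib.h>

/* Hypothetical miniature expression tree, just enough for the rules.
 * For E_CAST, `type` is an opaque id of the target pointer type. */
typedef enum { E_VAR, E_CONST, E_ADD, E_DEREF, E_ADDR, E_CAST } Kind;

typedef struct Expr {
	Kind kind;
	long value;          /* E_CONST only */
	int type;            /* E_CAST only */
	struct Expr *a, *b;  /* children; b is used by E_ADD only */
} Expr;

Expr *mk(Kind kind, Expr *a, Expr *b, long value, int type) {
	Expr *e = calloc(1, sizeof *e);
	e->kind = kind;
	e->a = a;
	e->b = b;
	e->value = value;
	e->type = type;
	return e;
}

/* Applies at most one rule at node e (children already simplified) and
 * sets *changed if it did. Dropped nodes are leaked for brevity. */
static Expr *simplify_node(Expr *e, int *changed) {
	/* *&p -> p  and  &*p -> p */
	if((e->kind == E_DEREF && e->a->kind == E_ADDR) ||
	   (e->kind == E_ADDR && e->a->kind == E_DEREF)) {
		*changed = 1;
		return e->a->a;
	}
	/* p as A* as B* -> p as B* */
	if(e->kind == E_CAST && e->a->kind == E_CAST) {
		e->a = e->a->a;
		*changed = 1;
		return e;
	}
	/* (p + x) as A* + y -> (p + (x + y)) as A* */
	if(e->kind == E_ADD && e->b->kind == E_CONST &&
	   e->a->kind == E_CAST && e->a->a->kind == E_ADD &&
	   e->a->a->b->kind == E_CONST) {
		e->a->a->b->value += e->b->value;
		*changed = 1;
		return e->a;
	}
	return e;
}

/* Bottom-up: simplify children, then rewrite this node to a fixpoint. */
Expr *denoop(Expr *e) {
	if(e->a) e->a = denoop(e->a);
	if(e->b) e->b = denoop(e->b);
	int changed;
	do {
		changed = 0;
		e = simplify_node(e, &changed);
	} while(changed);
	return e;
}
```

Fed `a.b.c` with `x = 4` and `y = 2`, it returns `*((&a + 6) as C*)`.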

## Dumbification

The idea of turning the AST progressively more primitive is called "dumbification" in the source. The simplest example would be the following:

```
a = -b
```

which should become

```
a = b
a = -a
```

This is because the `neg` instruction on x86 takes a single operand. If targeting an arch like MIPS, this specific dumbification would not be used, because one can use the 3-operand `subu` with the zero register.

Another rule is to extract function arguments and place them into local variables, but *only* if they do not form an x86 operand (for example, `5` is fine because `push 5` exists).

Dumbification must be repeated until there are no more changes. The dumbification part of the source is responsible for making sure the resulting AST is "trivially compilable" to machine code. For example, `a = a + b` is trivially compilable, because we have the `add reg, reg` instruction. What is trivially compilable depends on which registers are used in the end (a variable colored as `edi`, `esi` or `ebp` cannot be used for 8-bit stores/loads, since those registers have no 8-bit subregisters in 32-bit mode). These details are not taken into account by dumbification.
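
A sketch of the "repeat until no changes" loop, using the `neg` rule on a made-up three-address statement list (`Stmt`, `Block`, `rule_neg` and `dumbify` are all hypothetical). The round limit is an assumption of this sketch, not something nctref is known to do; it turns a rule that always reports success into an error instead of a hang.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical three-address statement "dst = op(src)"; variables are
 * single letters to keep the sketch tiny. */
typedef enum { OP_COPY, OP_NEG } Op;

typedef struct {
	char dst;
	Op op;
	char src;
} Stmt;

#define MAX_STMTS 64

typedef struct {
	Stmt s[MAX_STMTS];
	size_t n;
} Block;

/* Rule: "a = -b" is not trivially compilable on x86 (neg has a single
 * operand), so rewrite it as "a = b; a = -a". Returns true if it fired. */
static bool rule_neg(Block *b) {
	for(size_t i = 0; i < b->n; i++) {
		Stmt st = b->s[i];
		if(st.op == OP_NEG && st.dst != st.src && b->n < MAX_STMTS) {
			/* shift the tail right by one to make room */
			for(size_t j = b->n; j > i; j--) b->s[j] = b->s[j - 1];
			b->s[i] = (Stmt){ st.dst, OP_COPY, st.src };
			b->s[i + 1] = (Stmt){ st.dst, OP_NEG, st.dst };
			b->n++;
			return true;
		}
	}
	return false;
}

/* Run all rules until a full round changes nothing. Returns false if
 * max_rounds is exhausted, e.g. because some rule always fires. */
bool dumbify(Block *b, int max_rounds) {
	for(int round = 0; round < max_rounds; round++) {
		bool changed = false;
		changed |= rule_neg(b);
		/* further rules would follow here; their order matters */
		if(!changed) return true;
	}
	return false;
}
```

Starting from `a = -b`, the first round splits it, and the second round finds nothing left to do.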

Putting all of this together, here is nctref's dumbification of the following Fibonacci implementation, as of writing. The Nectar source code:

```
fibonacci: u32(u32 n) -> {
    if(n <= 1) {
        return n;
    }
    return fibonacci(n - 1) + fibonacci(n - 2);
};
```

And the processed AST output by the compiler:

```
u32(u32) fibonacci: u32(u32) {
    n = *(@stack + 4);
    if((n <= 1)) {
        return n;
    }
    $dumb2 = n;
    $dumb2 = ($dumb2 - 1);
    $dumb0 = fibonacci($dumb2);
    $dumb3 = n;
    $dumb3 = ($dumb3 - 2);
    $dumb1 = fibonacci($dumb3);
    $dumb0 = ($dumb0 + $dumb1);
    return $dumb0;
};
```

`@stack` is an internal variable that points to the beginning of the current stack frame.

NOTE: Later someone called this normalization, which is a much less stupid word than dumbification, and I'm shocked I never thought of it myself. There's also canonicalization...

## Use-def chain

I hate these things. The same goes for their counterpart, def-use chains; both are horribly underdocumented. Their only use in most literature is so the author can immediately move on to SSA form.

For each variable, its UD chain is a list of each usage in the AST, with the corresponding potential definition of the variable at that use. For each potential definition that exists at that point, there is one UD element in the chain. If there's only one potential definition at a point, then it's definitely the true one. Users of UD chains include optimizers and codegen. The UD chains are continually regenerated when needed by running the UD visitor on the top-level chunk.

In the simplest case, the code `u8 x = 0;` has an empty UD chain, because there are no uses. Its definition could even be classified as dead code.

Clearly, a definition of a variable overrides every definition before it, but only within the same basic block. In the following code, a variable has a single potential definition in each branch of the if statement, but afterward it will have two:

```
u8 x = 0;  /* Potential definitions: [x = 0]
            * UD-chain of x:
            * empty */
if(y) {
    x = 1;
    f1(x); /* Potential definitions: [x = 1]
            * UD-chain of x:
            * - def=[x = 1], use=[f1(x)] */
} else {
    x = 2;
    f2(x); /* Potential definitions: [x = 2]
            * UD-chain of x:
            * - def=[x = 1], use=[f1(x)]
            * - def=[x = 2], use=[f2(x)] */
}
f3(x);     /* Potential definitions: [x = 1], [x = 2]
            * UD-chain of x:
            * - def=[x = 1], use=[f1(x)]
            * - def=[x = 2], use=[f2(x)]
            * - def=[x = 1], use=[f3(x)]
            * - def=[x = 2], use=[f3(x)] */
```
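
The if/else example boils down to a few set operations. In this sketch (hypothetical names, not nctref's UD visitor), the potential definitions of `x` are a bitmask: an assignment replaces the set, and the end of an if/else takes the union of both branches.

```c
#include <stdint.h>

/* Potential definitions of one variable at a program point, as a
 * bitmask over numbered definitions (bit i = definition i). */
typedef uint32_t DefSet;

/* Within a basic block, an assignment kills every earlier definition. */
DefSet assign(int def_id) {
	return (DefSet)1 << def_id;
}

/* After an if/else either branch may have run, so take the union. */
DefSet merge(DefSet then_out, DefSet else_out) {
	return then_out | else_out;
}

/* A use gets one UD element per potential definition reaching it. */
int ud_elements_at_use(DefSet reaching) {
	int n = 0;
	for(; reaching; reaching &= reaching - 1) n++; /* clear lowest set bit */
	return n;
}

/* d0 is "x = 0", d1 is "x = 1", d2 is "x = 2". d0 is killed on both
 * paths, so only d1 and d2 can reach f3(x). */
DefSet reaching_at_f3(void) {
	DefSet then_out = assign(1);      /* x = 1;  -> {d1}     */
	DefSet else_out = assign(2);      /* x = 2;  -> {d2}     */
	return merge(then_out, else_out); /* f3(x)   -> {d1, d2} */
}
```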

It gets worse. Imagine the following pseudocode:

```
x = 0;
loop {
    x = x + 1;
    y = 5;
}
```

The UD chain knows nothing about loops. It only cares whether something comes before or after. As is, it'll assume `y` is not in conflict with `x`, and they'll end up in the same register. Because of this, the parser must insert a so-called "loop guard", which turns the AST into the following:

```
x = 0;
loop {
    x = x + 1;
    y = 5;
}
x;
```

The artificial use of `x` after the loop keeps the definition `x = x + 1` alive past `y = 5`, so the two variables now conflict.

That's one problem, but there's another:

```
x = 0;
loop {
    f(x);
    x = x + 1;
}
```

Despite appearing later in the source, `x = x + 1` is a potential definition for `f(x)`! This means the UD-chain generator must go through loops twice: once with the definitions from above the loop, and once with the definitions from within the loop. Additionally, the UD chain is assumed to be ordered by appearance in the source, so insertion in the second pass must take that into account.
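
The two-pass idea for the last loop can be modeled with the same kind of definition bitmask, again with hypothetical names. After the first pass over the body, the definitions reaching the loop's end are fed back to its start, so the second pass sees `x = x + 1` reaching `f(x)`.

```c
#include <stdint.h>

/* Bitmask of potential definitions of x (bit i = definition i). */
typedef uint32_t DefSet;

#define D_INIT ((DefSet)1 << 0) /* x = 0;     before the loop */
#define D_INCR ((DefSet)1 << 1) /* x = x + 1; inside the loop */

/* Hypothetical model of:  x = 0; loop { f(x); x = x + 1; }
 * Walks the loop body `passes` times and returns the definitions that
 * were seen reaching the use f(x). */
DefSet defs_at_f(int passes) {
	DefSet entry = D_INIT;   /* what reaches the top of the body */
	DefSet at_use = 0;
	for(int p = 0; p < passes; p++) {
		at_use |= entry;     /* f(x) is the first statement of the body */
		DefSet end = D_INCR; /* x = x + 1 kills whatever came before */
		/* back edge: the next iteration starts with either the
		 * definition from before the loop or the one from the body */
		entry = D_INIT | end;
	}
	return at_use;
}
```

`defs_at_f(1)` yields only `{x = 0}`; `defs_at_f(2)` yields `{x = 0, x = x + 1}`, and a third pass adds nothing for this loop.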

Now, why did I choose UD chains? Why, simplicity, obviously...

## Coloring

At this point we have a very distorted kind of Nectar AST in our function. Sure, we've got blocks and other familiar things, but all variables are in a flat list. These variables are essentially the "virtual registers" you hear a lot about. Because x86 only has six general-purpose registers, we must assign each of these variables (VTEs) to a physical machine register.

This problem is a large area of study in itself, but a common approach is to treat it as a graph coloring problem, where vertices are VTEs, and edges connect conflicting VTEs that cannot have the same color. Said edges are determined using the UD chains of both VTEs.

The actual coloring algorithm used is Welsh-Powell, which sorts the VTEs/vertices by degree in descending order before attempting greedy coloring.
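
A compact C sketch of Welsh-Powell over an adjacency-matrix interference graph. The `Graph` type and `welsh_powell` function are illustrative, not nctref's code. It uses the vertex-by-vertex greedy form, which assigns the same colors as the color-by-color description when both follow the same degree order.

```c
#include <stdbool.h>

#define MAX_V 32

/* adj[i][j] is true if variables i and j conflict (are live at the
 * same time) and therefore must not share a register. */
typedef struct {
	int n;
	bool adj[MAX_V][MAX_V];
} Graph;

static int degree(const Graph *g, int v) {
	int d = 0;
	for(int u = 0; u < g->n; u++) d += g->adj[v][u];
	return d;
}

/* Order vertices by decreasing degree, then give each the lowest color
 * not used by an already-colored neighbor. Fills color[] and returns
 * the number of colors used. */
int welsh_powell(const Graph *g, int color[]) {
	int order[MAX_V];
	for(int i = 0; i < g->n; i++) { order[i] = i; color[i] = -1; }

	/* stable insertion sort by decreasing degree (n is small) */
	for(int i = 1; i < g->n; i++) {
		int v = order[i], dv = degree(g, v), j = i - 1;
		while(j >= 0 && degree(g, order[j]) < dv) { order[j + 1] = order[j]; j--; }
		order[j + 1] = v;
	}

	int used = 0;
	for(int k = 0; k < g->n; k++) {
		int v = order[k];
		bool taken[MAX_V] = { false };
		for(int u = 0; u < g->n; u++) {
			if(g->adj[v][u] && color[u] >= 0) taken[color[u]] = true;
		}
		int c = 0;
		while(taken[c]) c++;
		color[v] = c;
		if(c + 1 > used) used = c + 1;
	}
	return used;
}
```

If the returned count exceeds the number of usable registers, that is where spilling comes in.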

If there are more colors than physical registers, then we have a conflict and must spill. There are two ways to do so: spill2var and spill2stack. The former is necessary on boundaries where suddenly a specific register/color must be used (e.g. returning in `eax`). The latter transforms every use of a local variable (`ASTExprVar` where its VTE is of type `VARTABLEENTRY_VAR`) into the form `@stack + n`.

If spill2stack is used, then code generation must fail once so that dumbification can be applied again.

## Pre-coloring

I skipped forward a bit. Coloring assumes that all registers have equal importance, which is never true. A return value must be in `eax`, the remainder of a division must be in `edx`, etc. In 64-bit mode, the index of an argument determines which register it may end up in.

The pre-coloring visitor applies said rules to the AST, setting the colors in the VTEs. Conflicts can occur here too: two variables with overlapping live ranges may demand the same color, or a single variable may be demanded in more than one color. In the latter case, the pre-coloring visitor gives up as soon as it's detected. In both cases we do spill2var, not spill2stack, because spilling to the stack doesn't solve the pre-coloring problem.
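
A sketch of detecting the two kinds of pre-coloring conflicts just described, assuming colors are stored per variable in an array and interference is given as a matrix. All names here are hypothetical.

```c
#include <stdbool.h>

#define MAX_V 8
#define NO_COLOR (-1)

/* Hypothetical register ids used as colors. */
enum { REG_EAX, REG_ECX, REG_EDX, REG_EBX };

/* Pin variable `var` to register `reg`. Fails if the variable was already
 * demanded in a different register: the case where the pass gives up. */
bool precolor(int colors[], int var, int reg) {
	if(colors[var] != NO_COLOR && colors[var] != reg) return false;
	colors[var] = reg;
	return true;
}

/* The other kind of conflict: two variables that are live at the same
 * time were pinned to the same register. live[a][b] stands in for the
 * UD-chain based interference check. */
bool overlap_conflict(const int colors[], int n, bool live[][MAX_V]) {
	for(int a = 0; a < n; a++) {
		for(int b = a + 1; b < n; b++) {
			if(colors[a] != NO_COLOR && colors[a] == colors[b] && live[a][b]) {
				return true;
			}
		}
	}
	return false;
}
```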

## Callee-saved pass

If a function uses a callee-saved register, it must be stored and loaded at the correct times. This is done by modifying the AST in a special pass.

Of the four currently used registers, only `ebx` is callee-saved. A random variable colored `ebx` is chosen, and is saved to/loaded from the stack. The rule is written such that dumbification isn't necessary, unlike spill2stack.

## Code generation

FINALLY. This pass doesn't use `generic_visitor`, because it may consume multiple sibling AST nodes when emitting code. At this point there's nothing arcane or obscure; the code is pretty self-explanatory.

Using the same Fibonacci example as above, this is the result:

```asm
global fibonacci
fibonacci:
    mov edx, [esp + 4]
    cmp edx, 1
    ja .L0
    mov eax, edx
    ret
.L0:
    mov eax, edx
    dec eax
    push ecx
    push edx
    push eax
    call fibonacci
    add esp, 4
    pop edx
    pop ecx
    mov ecx, eax
    mov eax, edx
    sub eax, 2
    push ecx
    push edx
    push eax
    call fibonacci
    add esp, 4
    pop edx
    pop ecx
    add ecx, eax
    mov eax, ecx
    ret
```

## Other problems with this approach

Short-circuit evaluation is when the evaluation of an expression is guaranteed to stop once the output is already known. For example, in `A || B`, if `A` is already truthy, then `B` is not evaluated. This is not just an optimization but an important semantic feature, as evaluation of the operands may have side effects.
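
The semantic difference is easy to observe in C, whose `||` also short-circuits. The counter makes the right operand's side effect visible, and `or_eager` shows what evaluating both operands into temporaries first amounts to. Names are illustrative.

```c
#include <stdbool.h>

/* Counts evaluations of the right-hand operand, making its side
 * effect observable. */
int rhs_calls = 0;

static bool rhs(void) {
	rhs_calls++;
	return true;
}

/* C's || short-circuits: rhs() only runs when a is false. */
bool or_short_circuit(bool a) {
	return a || rhs();
}

/* Both operands computed into temporaries up front: the side effect
 * happens even when a is already true. */
bool or_eager(bool a) {
	bool z = a;
	bool w = rhs();
	return z | w;
}
```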

Let us write `if(x == 1 || y == 1) { do stuff; }` in x86 (with `x` in `eax` and `y` in `ebx`):

```asm
    cmp eax, 1
    je .L1
    cmp ebx, 1
    jne .L2
.L1:
    ; do stuff
.L2:
```

Note that the two jump instructions are basically goto statements. As the Nectar IR is defined without gotos, it is practically impossible for the compiler to output the neat code shown above. You could insert special logic for this case, but in general it'll fail.

Even worse, the dumbification pass will try to move the condition into a variable and dumbify it further, creating the following:

```
u1 z = x == 1;
u1 w = y == 1;
u1 k = z || w;
if(k) {
    do stuff;
}
```

And now suddenly we need two new registers for no reason...

In conclusion, an optimized IR should not use self-contained blocks, but should actually be flat, like the real thing, and have goto statements. Fixing this in nctref will require extreme refactoring, as the notion of blocks forming a tree is ingrained. Also, statements within a block form a singly-linked list. Even if there were a simple `ASTStmtGoto` node, it could not be a simple pointer to a statement, because passes need to modify the AST. For the time being, I have given up on short-circuit evaluation, and I support neither `||` nor `&&`.

## Adding a Feature

When adding a feature, first write it out in Nectar in the ideal dumbified form. Make sure this compiles correctly. Afterward, implement dumbification rules so that code can be written in any fashion. If specific colorings are required, then the pre-coloring and spill2var passes must be updated. The following is an example with multiplication, as this is what I'm adding as of writing.

Note the way `mul` works on x86. Firstly, one of the operands is also the destination: `mul` takes a single explicit operand and multiplies it with the implicit A register, so in the AST it behaves like a 2-operand instruction. Secondly, the explicit operand cannot be an immediate, because it is defined as r/m (register or memory), so if the second operand is a constant, it must be split into a variable (`varify` in `dumberdowner.c`). Thirdly, the destination must be the A register, so one of the operands must be pre-colored to A. Fourthly, `mul` clobbers the D register with the high half of the product. In other words, we have an instruction with *two* output registers, which the Nectar AST does not support. But we can't have the register allocator assign anything to D here.
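
To make the two outputs concrete, this C function emulates the 32-bit `mul r/m32`: the 64-bit product of `eax` and the operand is split into `edx` (high half) and `eax` (low half). The `MulResult` type is made up for the sketch.

```c
#include <stdint.h>

/* Register state after "mul r/m32": EDX:EAX holds the 64-bit product. */
typedef struct {
	uint32_t eax; /* low half  */
	uint32_t edx; /* high half */
} MulResult;

MulResult x86_mul32(uint32_t eax, uint32_t src) {
	uint64_t product = (uint64_t)eax * src;
	MulResult r = { (uint32_t)product, (uint32_t)(product >> 32) };
	return r;
}
```

Assuming `*^` is the mulhi operator, as the assignment names in this section suggest, `*` corresponds to the `eax` half and `*^` to the `edx` half.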

|

|

|

|

To account for this, we can have a second assignment statement right next to the multiplication. Because the main multiplication clobbers the source operand, the mulhi assignment must come before the mul. Putting all this together, this is the canonical way to do `z = x * y` with an x86 target:

|

|

|

|

z = x;

|

|

w = z *^ y;

|

|

z = z * y;

|

|

|

|

But this is without pre-coloring. We want precolored nodes to live as little as possible, because separately solving pre-coloring collisions whilst also keeping the code dumbified *and* not horrible is pretty much impossible. I've tried.

|

|

|

|

k = x;

|

|

w = k *^ y;

|

|

k = k * y;

|

|

z = k;

|

|

|

|

Where `k` and `w` are the VTEs that must live and die immediately. The dumbifier checks if `w` and `k` are already precolored to not repeat forever, but it would be better to check the UD-chain.

|

|

|

|

Lastly, the codegen pass must recognize the above sequence as a multiplication and emit a single `mul` instruction.

|

|

|

|

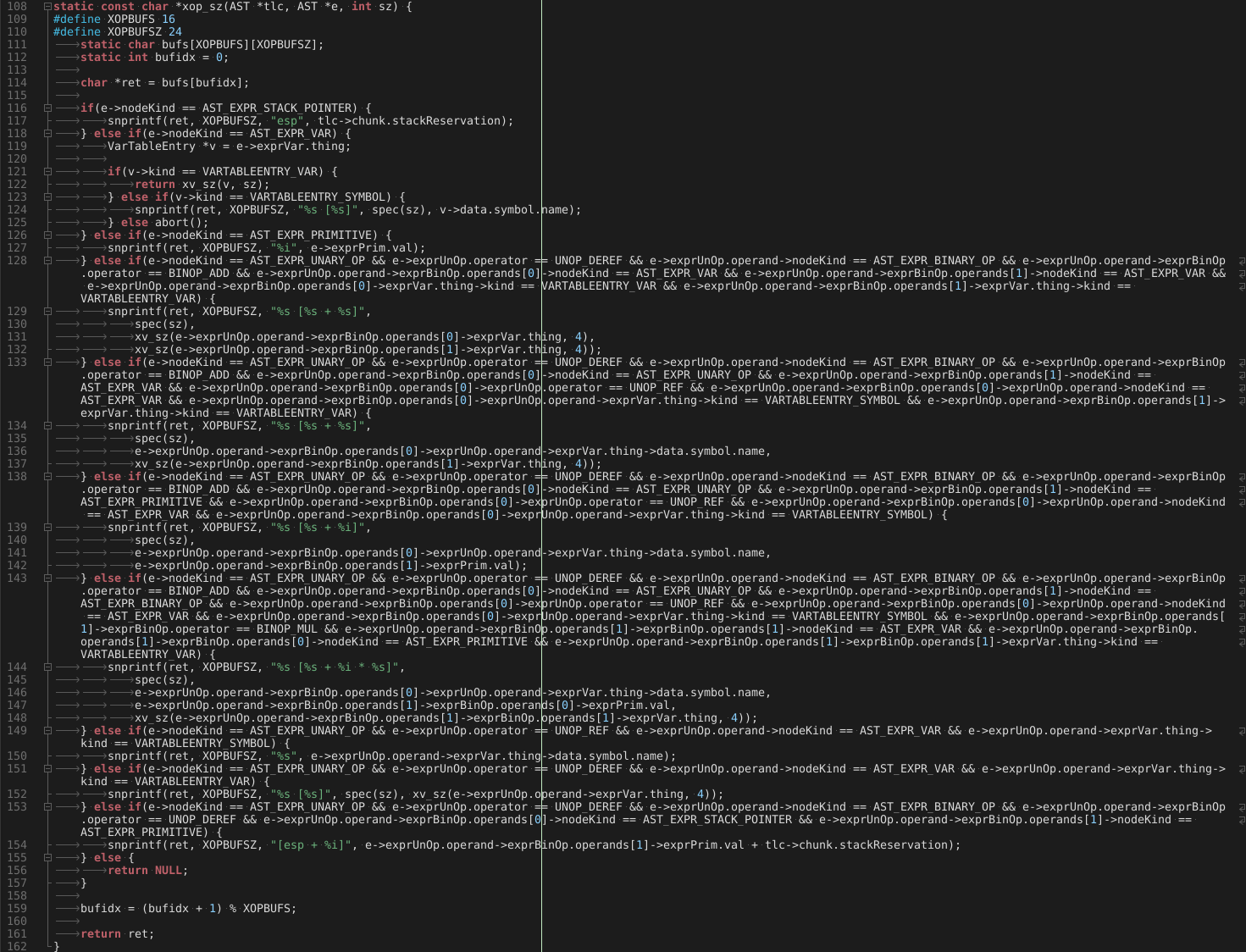

In `cg.c` is a function called `xop`, which returns an x86 operand string, given a trivially compilable Nectar expression. Because we've guaranteed the other operand may not be a constant, we do not need to check the XOP type, but it's a good idea to insert `assert`s and `abort`s everywhere to prevent hard-to-find bugs.

|

|

|

|

Once all that is done and tested, now we can add the following dumbification rules: all binary operations with the operand `AST_BINOP_MUL` or `AST_BINOP_MULHI` must be the whole expression within an assignment statement. If not, extract into a separate assignment & new variable with `varify`. The destination of the assignment, and both operands of the binary operation must be of type `AST_EXPR_VAR`, with their corresponding variables being of type `VARTABLEENTRY_VAR`, not `VARTABLEENTRY_SYMBOL` or `VARTABLEENTRY_TYPE`. If any of those don't apply, `varify` the offenders. Each such assignment have a neighboring, symmetric assignment, so that both A and D are caught by the pre-coloring pass.
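
A sketch of the operand check described above, on trimmed-down hypothetical AST types (`Expr`, `mul_needs_varify` and the enum names are illustrative, not nctref's). It reports which parts of a `dst = lhs * rhs` assignment would need `varify`; it does not cover the "whole expression" rule or the neighboring mulhi assignment.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical, trimmed-down versions of the relevant AST shapes. */
typedef enum { EXPR_VAR, EXPR_CONST, EXPR_BINOP } ExprKind;
typedef enum { VTE_VAR, VTE_SYMBOL, VTE_TYPE } VteKind;
typedef enum { BINOP_ADD, BINOP_MUL, BINOP_MULHI } BinOp;

typedef struct Expr {
	ExprKind kind;
	VteKind vte;             /* meaningful for EXPR_VAR only */
	BinOp op;                /* meaningful for EXPR_BINOP only */
	struct Expr *lhs, *rhs;  /* operands of EXPR_BINOP */
} Expr;

/* A local variable, i.e. something that will be colored into a register. */
static bool is_local_var(const Expr *e) {
	return e->kind == EXPR_VAR && e->vte == VTE_VAR;
}

/* Bitmask of what must be varified in "dst = src" for a MUL/MULHI src:
 * bit 0 = dst, bit 1 = left operand, bit 2 = right operand.
 * Returns 0 when the assignment already has the required shape. */
unsigned mul_needs_varify(const Expr *dst, const Expr *src) {
	if(src->kind != EXPR_BINOP || (src->op != BINOP_MUL && src->op != BINOP_MULHI)) {
		return 0;
	}
	unsigned mask = 0;
	if(!is_local_var(dst)) mask |= 1;
	if(!is_local_var(src->lhs)) mask |= 2;
	if(!is_local_var(src->rhs)) mask |= 4;
	return mask;
}
```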

A common bug when writing a dumbification rule is ending up with one that always succeeds. If this happens, the compiler gets stuck dumbifying forever, which is nonsense. It would be nice if you could formally prove that won't happen. Another common bug is not realizing that the order in which dumbification rules are applied matters :).

You know, I really regret writing this in C.

Oh God... and for what? So it runs on MS-DOS?? Was it worth it? It doesn't even work there; it crashes!

You know, I understand if you don't want to help.